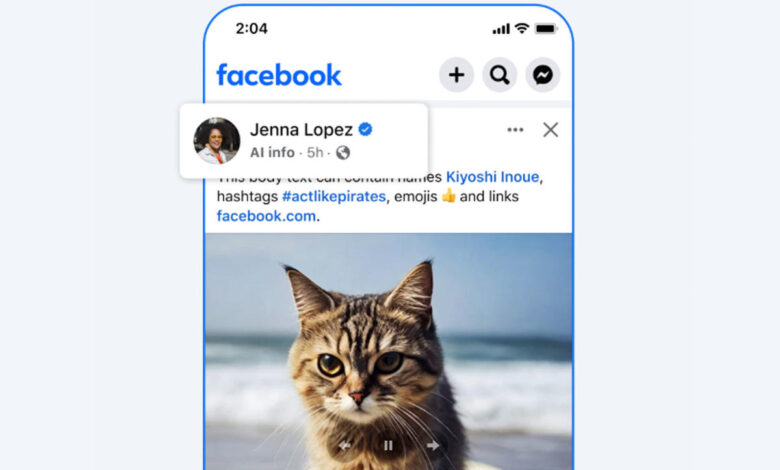

Meta is updating its “Made with AI” labels after widespread complaints from photographers that the company was mistakenly flagging non-AI-generated content. In an update, the company said that it will change the wording to “AI info” because the current labels “weren’t always aligned with people’s expectations and didn’t always provide enough context.”

The company introduced the “Made with AI” labels earlier this year after criticism of its “manipulated media” policy. Meta said that, like many of its peers, it would rely on “industry standard” signals to determine when generative AI had been used to create an image. However, it wasn’t long before photographers began noticing that Facebook and Instagram were applying the badge to images that hadn’t been created with AI. According to tests, photos edited with Adobe’s generative fill tool in Photoshop would trigger the label even if the edit was only to a “tiny speck.”

While Meta didn’t name Photoshop, the company said in its update that “some content that included minor modifications using AI, such as retouching tools, included industry standard indicators” that triggered the “Made with AI” badge. “While we work with companies across the industry to improve the process so our labeling approach better matches our intent, we’re updating the ‘Made with AI’ label to ‘AI info’ across our apps, which people can click for more information.”

Somewhat confusingly, the new “AI info” labels won’t actually have any details about what AI-enabled tools may have been used for the image in question. A Meta spokesperson confirmed that the contextual menu that appears when users tap on the badge will remain the same. That menu has a generic description of generative AI and notes that Meta may add the notice “when people share content that has AI signals our systems can read.”
